AI Expertise

Applied Artificial Intelligence

I bring a comprehensive understanding of AI paradigms, from foundational concepts to advanced Reinforcement Learning techniques, and implement them using frameworks such as PyTorch.

My focus lies in applying AI, particularly RL, to solve complex real-world problems, bridging the gap between theoretical knowledge and practical application.

AI Concepts & Tools

I have a solid theoretical and practical foundation across the spectrum of Artificial Intelligence and Machine Learning.

- Supervised Learning: Understanding and application of algorithms for classification and regression tasks using labeled data.

- Unsupervised Learning: Knowledge of techniques like clustering and dimensionality reduction to find patterns in unlabeled data.

- Reinforcement Learning (RL): In-depth understanding of RL principles, including Markov Decision Processes (MDPs), value functions, policy gradients, and various learning algorithms (Q-learning, PPO, SAC, etc.).

- Deep learning frameworks: Hands-on experience using PyTorch and TensorFlow to develop, train, and deploy deep learning models, particularly in the context of RL.
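To make the RL principles listed above concrete, here is a minimal tabular Q-learning sketch. The five-state chain environment, the hyperparameters and all function names are illustrative assumptions and are not taken from the projects described below. The agent learns purely by trial and error: epsilon-greedy exploration generates experience, and the Q-learning update bootstraps from the best next action.

```python
import random

N_STATES = 5          # hypothetical chain: states 0..4, state 4 is the terminal goal
ACTIONS = (-1, +1)    # move left or move right


def step(state, action):
    """Deterministic chain environment: reward 1 only when the goal is reached."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    reward = 1.0 if done else 0.0
    return next_state, reward, done


def greedy(q_row, rng):
    """Index of the highest-valued action, breaking ties at random."""
    best = max(q_row)
    return rng.choice([a for a, v in enumerate(q_row) if v == best])


def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.1, max_steps=100, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # Q[state][action index]
    for _ in range(episodes):
        state = 0
        for _ in range(max_steps):
            # Epsilon-greedy: explore with probability epsilon, otherwise exploit.
            if rng.random() < epsilon:
                a = rng.randrange(len(ACTIONS))
            else:
                a = greedy(q[state], rng)
            next_state, reward, done = step(state, ACTIONS[a])
            # Off-policy target: reward plus discounted value of the best next action.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][a] += alpha * (target - q[state][a])
            state = next_state
            if done:
                break
    return q


q = q_learning()
policy = [ACTIONS[row.index(max(row))] for row in q[:-1]]  # skip the terminal state
print(policy)  # [1, 1, 1, 1]
```

After training, the greedy policy moves right in every non-terminal state, and the learned values approach the discounted returns (1.0, 0.9, 0.81, 0.729 for states 3 down to 0).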

Frameworks I Use

PyTorch

TensorFlow

scikit-learn

OpenCV

Reinforcement Learning Specialization

My primary area of specialization within AI is Reinforcement Learning. I am particularly drawn to RL because its trial-and-error learning process closely mirrors how learning occurs in the natural world. This paradigm allows agents to learn optimal behaviors directly from interaction with an environment, making it highly suitable for complex control tasks, robotics, and decision-making problems where explicit programming is difficult or impossible.

My focus has been on applying RL to challenging domains, leveraging its power to discover novel strategies and adapt to dynamic conditions.

Project on Locomotion

Within my master's thesis, I worked on integrating a variable stiffness locomotion policy on the GO2 quadruped robot. This work was published at IFAC and is available for download here. The approach uses reinforcement learning to train an AI agent to walk. Whereas previous approaches learn to predict only the joint positions, we trained the agent to also learn the joint stiffnesses. Normally, joint stiffness is tuned manually, which can be time-consuming and suboptimal. Our approach aims to automate the adjustment of motor stiffness according to task requirements, eliminating the need for manual tuning.
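A variable stiffness policy outputs joint stiffnesses alongside joint position targets, and a PD controller turns both into motor torques via τ = Kp (q* − q) − Kd q̇. The following is a minimal sketch under stated assumptions: the action layout (n position offsets followed by n raw stiffness values), the stiffness bounds, the action scale and deriving damping from stiffness are all hypothetical choices, not the exact setup used in the thesis.

```python
import math

# Hypothetical stiffness bounds in N*m/rad; the actual ranges are not given in the text.
KP_MIN, KP_MAX = 20.0, 80.0
# Assumed: derive Kd from Kp via critical damping, as if each joint had unit inertia.
DAMPING_RATIO = 1.0


def scale_stiffness(raw: float) -> float:
    """Map a policy output in [-1, 1] to a stiffness in [KP_MIN, KP_MAX]."""
    raw = max(-1.0, min(1.0, raw))  # clip to the valid action range
    return KP_MIN + 0.5 * (raw + 1.0) * (KP_MAX - KP_MIN)


def pd_torques(action, q, qd, q_default, action_scale=0.25):
    """Convert a variable-stiffness action into joint torques with a PD law.

    action:    2n values; the first n are position offsets, the last n raw stiffnesses.
    q, qd:     current joint positions (rad) and velocities (rad/s).
    q_default: nominal joint pose (rad) that the offsets are added to.
    """
    n = len(q)
    assert len(action) == 2 * n, "expected n position offsets plus n stiffnesses"
    torques = []
    for i in range(n):
        q_target = q_default[i] + action_scale * action[i]  # learned position target
        kp = scale_stiffness(action[n + i])                  # learned stiffness
        kd = 2.0 * DAMPING_RATIO * math.sqrt(kp)             # assumed damping rule
        torques.append(kp * (q_target - q[i]) - kd * qd[i])
    return torques


# One joint at rest: target offset 0.25 * 0.4 = 0.1 rad at the softest stiffness (20).
print(pd_torques([0.4, -1.0], q=[0.0], qd=[0.0], q_default=[0.0]))  # [2.0]
```

In a position-only policy, Kp would be a fixed hand-tuned constant; letting the policy choose it per joint and per time step is what removes the manual tuning step.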

Key Results

- Velocity tracking: Our method achieved more accurate velocity tracking than position-based approaches (baselines).

- Push recovery: Our method outperformed the baselines, demonstrating a robust learned gait.

- Energy efficiency: Our method is competitive with low-stiffness policies and outperforms high-stiffness policies.
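The text does not state how these comparisons were measured. As a hedged illustration only, two common ways to quantify velocity tracking and energy use in legged locomotion are the root-mean-square error between commanded and measured base velocity, and the mechanical energy obtained by integrating |τ · q̇| over joints and time. The function names and units below are assumptions.

```python
import math


def velocity_tracking_rmse(commanded, measured):
    """RMSE between commanded and measured base velocities (m/s), one sample per step."""
    assert len(commanded) == len(measured) and commanded, "need equal, non-empty sequences"
    squared = [(c - m) ** 2 for c, m in zip(commanded, measured)]
    return math.sqrt(sum(squared) / len(squared))


def mechanical_energy(torques, joint_velocities, dt):
    """Approximate energy (J) as the sum over steps and joints of |tau * qdot| * dt.

    torques, joint_velocities: per-step lists of per-joint values (N*m and rad/s).
    dt: control time step in seconds.
    """
    return sum(
        sum(abs(tau * qd) for tau, qd in zip(tau_t, qd_t)) * dt
        for tau_t, qd_t in zip(torques, joint_velocities)
    )


print(velocity_tracking_rmse([1.0, 1.0], [0.9, 1.1]))
print(mechanical_energy([[2.0, -1.0]], [[0.5, 2.0]], dt=0.01))
```

Lower values are better for both metrics; taking the absolute value of power means negative (braking) work is still counted as energy spent.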

AI for robotics is evolving quickly, opening up exciting possibilities, from autonomous vehicles to smart assistants and beyond. My work has focused on the intersection of the two fields, combining control theory with the flexibility of AI. It's a space I'm deeply passionate about, and one where I bring both solid experience and an eagerness to explore emerging frontiers. My previous work includes manipulation as well as locomotion.

Project on Computer Vision (WILD-CAT)

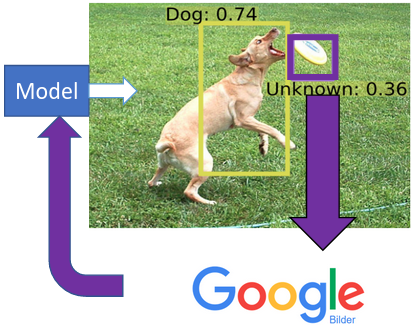

Traditional object detectors only recognise a fixed set of classes. To overcome this limitation, I leveraged a recent transformer architecture (CAT) that is able to detect objects of unknown classes. I combined this capability with the broader knowledge of the Google Cloud AI and Microsoft Azure services to label the newly found objects: the unknown objects are sent to these cloud services, and their labels are used to build a new dataset, which can then be used to train the existing transformer.

The set of classes the detector recognises is therefore extended iteratively. This extension is called Web-Integrated LoCalization and Detection of Unknown Objects with CAT (WILD-CAT).
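The labeling loop described above can be sketched as follows. This is a hypothetical illustration: the cloud services are replaced by stub callables (no real Google Cloud or Azure API is called), and keeping the most confident label above a threshold is an assumed way of combining the two services, not necessarily the rule used in WILD-CAT.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Tuple

# Hypothetical labeler interface: takes an image crop, returns (label, confidence).
# In WILD-CAT these would wrap the cloud vision services; here they are plain stubs.
Labeler = Callable[[object], Tuple[str, float]]


@dataclass
class Detection:
    crop: object   # image patch of the detected object
    label: str     # "unknown" when the detector has no class for the object


@dataclass
class Dataset:
    classes: List[str] = field(default_factory=list)
    samples: List[Tuple[object, str]] = field(default_factory=list)


def label_unknown(crop: object, labelers: List[Labeler], min_conf: float = 0.6) -> Optional[str]:
    """Ask every labeler and keep the most confident answer above min_conf (assumed rule)."""
    best_label, best_conf = None, 0.0
    for labeler in labelers:
        label, conf = labeler(crop)
        if conf >= min_conf and conf > best_conf:
            best_label, best_conf = label.lower(), conf
    return best_label


def extend_dataset(detections: List[Detection], labelers: List[Labeler],
                   dataset: Dataset, min_conf: float = 0.6) -> Dataset:
    """Label unknown detections and add them, plus any new class names, to the dataset."""
    for det in detections:
        if det.label != "unknown":
            continue  # known classes are already covered by the detector
        label = label_unknown(det.crop, labelers, min_conf)
        if label is None:
            continue  # no service was confident enough; skip this object
        if label not in dataset.classes:
            dataset.classes.append(label)  # the class set grows with each pass
        dataset.samples.append((det.crop, label))
    return dataset


# Example with stub services standing in for the cloud APIs.
google_stub = lambda crop: ("Cup", 0.9)
azure_stub = lambda crop: ("mug", 0.7)
detections = [Detection("crop-1", "unknown"), Detection("crop-2", "person")]
ds = extend_dataset(detections, [google_stub, azure_stub], Dataset(classes=["person"]))
print(ds.classes)  # ['person', 'cup']
```

After each pass, the detector would be retrained on the extended dataset so that formerly unknown classes become known ones in the next iteration.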

- Detection of unknowns: WILD-CAT effectively detects objects it has never encountered before and was not trained to detect.

- Experimental results: Experiments validate the approach, showing that it successfully labels the unknown instances.